This article originally appeared on Scape Technologies' Medium page.

Designing Large-Scale AR Apps With ScapeKit

When you mention "Augmented Reality" to anybody outside the AR industry, they often reply "ah, like Pokemon Go!". Undoubtedly, Pokemon Go is so far the product that has introduced the largest number of members of the general public to the concept of Augmented Reality, even though its first iteration didn't use advanced AR.

Pokemon Go gave us a first glimpse into how much AR can captivate audiences, even if it was using a more primitive form of AR.

One of the reasons the original iteration of Pokemon Go didn't fully capitalise on the promise of large-scale AR was that it used GPS to loosely position content, and GPS has a maximum precision radius of around 4 metres (when you're lucky).

In practice, however, the accuracy of GPS is much worse, especially in cities: buildings, trees, tunnels, clothing and human bodies can stop the satellites' signals from reaching the receiver, lowering accuracy considerably.

For AR, such precision is not enough, so we need to look elsewhere for our precise positioning needs.

Now it's our turn 💪

Like me, many developers have since wanted to create something similar that gets closer to delivering the full promise of large-scale AR: accurately positioned content that consistently appears anchored to the right spot in the real world. This can only be achieved if the positioning system provides centimetre-accurate geolocation measurements.

One such positioning product is ScapeKit, an SDK from Scape Technologies that provides reliable and accurate geolocation measurements using Computer Vision.

Having worked with Scape's SDK in the past, I was very excited to hear they had released a new version of ScapeKit that abstracts away much of the geolocation-to-3D-space calculation I previously had to do manually, and that introduces many performance improvements to the localisation routines. As you can imagine, I immediately wanted to try it out in a real-world scenario!

But what to build? 🧐

In order to experiment with the new SDK and large-scale AR more generally, I decided to build a simple but effective AR Gardening app. The application allows users to plant sunflowers anywhere in the world and, thanks to persistence, to find them again exactly where they planted them. I also added some playful mechanics to demonstrate real-time, multi-user interaction at scale.

For example, if a sunflower is not watered for 24h it will shrivel up until it is watered again. In addition, every 24h the sunflowers will grow by one step, up to the final, fully-blossomed stage. All users can see these changes and are able to interact with the sunflowers in real-time.
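One way to implement mechanics like these without a scheduled server job is to derive each flower's state from timestamps stored alongside it. The Swift sketch below illustrates that idea under stated assumptions: the `Sunflower` type, its field names, the `bonusDays` counter (standing in for the artificial extra days of growth users can add) and the number of growth stages are all hypothetical, and growth is assumed to continue even while a flower is shrivelled.

```swift
import Foundation

/// Hypothetical model of one sunflower; field names are illustrative only.
struct Sunflower {
    var plantedAt: Date
    var lastWateredAt: Date
    var bonusDays: Int = 0   // artificial days of growth added by users
}

let secondsPerDay: TimeInterval = 24 * 60 * 60
let maxStage = 4             // assumption: stages 0...4, where 4 is fully blossomed

/// One growth step per full 24h since planting (plus bonus days), capped at maxStage.
func growthStage(of flower: Sunflower, at now: Date) -> Int {
    let elapsedDays = Int(now.timeIntervalSince(flower.plantedAt) / secondsPerDay)
    return min(max(elapsedDays, 0) + flower.bonusDays, maxStage)
}

/// A flower is shrivelled once 24h have passed since it was last watered.
func isShrivelled(_ flower: Sunflower, at now: Date) -> Bool {
    now.timeIntervalSince(flower.lastWateredAt) >= secondsPerDay
}
```

Because the state is a pure function of shared data and the current time, every device looking at the same flower computes the same result; the caveat is that device clocks which disagree will disagree on the state near a 24h boundary.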

Of course, I have demo videos of the app in action, but I show them towards the end of the article: that way we will have built up knowledge around why we should make certain design decisions when building for AR at scale and by then the videos will make much more sense.

In this article, I will talk about how you can use ScapeKit to build large-scale, accurately-positioned AR apps as I did for my sample AR Gardening app.

The goal is to pass on as much of the knowledge and as many of the best practices I accumulated empirically as possible (no textbooks or how-tos here, until now!), so that you can get started more quickly and build on top of those findings.

Without further ado, let's get going! (Note: throughout the article, "AR" stands for Augmented Reality.)

Requirements for large-scale AR apps

First, we must start our journey by planning ahead: what features do we want to include in our large-scale AR experience? To replicate the interactive, multiplayer and real-time AR Gardening app the list is:

- The content is displayed in AR

- The content is persisted

- Users of the app can place content in the real world themselves

- Nearby users receive updates in real-time

- Multiple users can interact in real-time

Let's look at these requirements in more detail.

Display our content in AR

Obviously, we want to display our 3D content in Augmented Reality. To achieve this I used SceneKit + ARKit, but you can go with ARCore + Sceneform if you're on Android, or Unity or Unreal Engine for multiplatform support.

These frameworks also make it easy to cover our requirement that users should be able to place the 3D content themselves, since their APIs provide most of what's needed to implement such functionality.

Content is persisted

"Persistent content" means that the same 3D AR content can be found exactly where it was placed at two different points in time. For example, if we place an interactive AR ice-cream stand at a specific street corner, we want to find it in the exact spot where we left it when we come back to that area and launch our AR app again.

Scape Technologies' Visual Positioning system in action. It even works at night! ©Scape Technologies Ltd

To achieve persistence we will use ScapeKit for iOS, which gives us extremely accurate geopositioning measurements (i.e. accurate latitude, longitude and orientation) and handles the conversion from geocoordinates to Euclidean space coordinates so that we can display our content in the 3D scene.

Real-time updates

When users interact with AR content, any changes made should be reflected in real-time for anybody observing that scene.

For example, in the AR Gardening app I developed, it's possible to add one artificial day of growth to the sunflowers you planted. This plays an animation of the flower growing, and the change is reflected in real time, both in the shared data structures and in the 3D content seen by all the other users.

We will cover in a later section what to use to achieve data consistency, real-time updates and interactions.

How do we get there? 🛣

Now that we have a clear idea of what we want to achieve, we need to get there step by step so that we actually understand what's going on under the hood.

From Latitude and Longitude to Euclidean space

All large-scale AR applications have to deal with geocoordinates (e.g. Latitude and Longitude), so this is a good topic to start from. If you're serious about building large-scale AR apps it's fundamental to have a grasp of what the positioning system of your choice does under the hood so that you can debug possible issues with confidence.

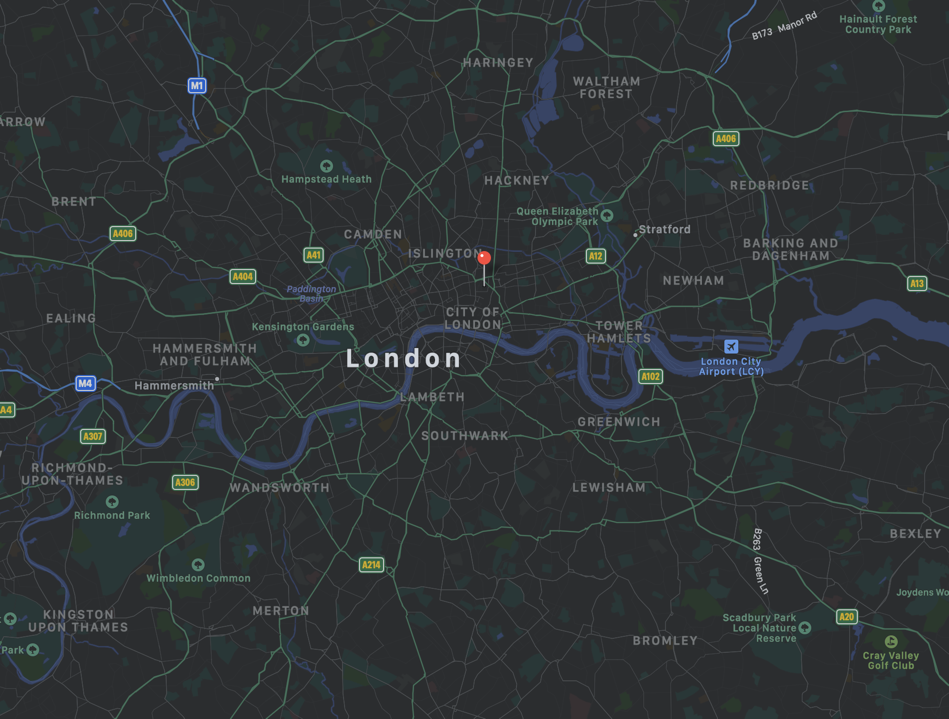

When we want to represent the position of something in the real world, outdoors, we assign it a Latitude and Longitude, e.g.: 51.524015, -0.082064.

Map of London showing the location of the geocoordinates 51.524015, -0.082064

However, 3D scene graph systems (like SceneKit, Sceneform, Unity etc.) typically don't work natively with latitude and longitude. Instead, they work in Euclidean space, so we need to convert geocoordinates to Euclidean coordinates in order to show the content.

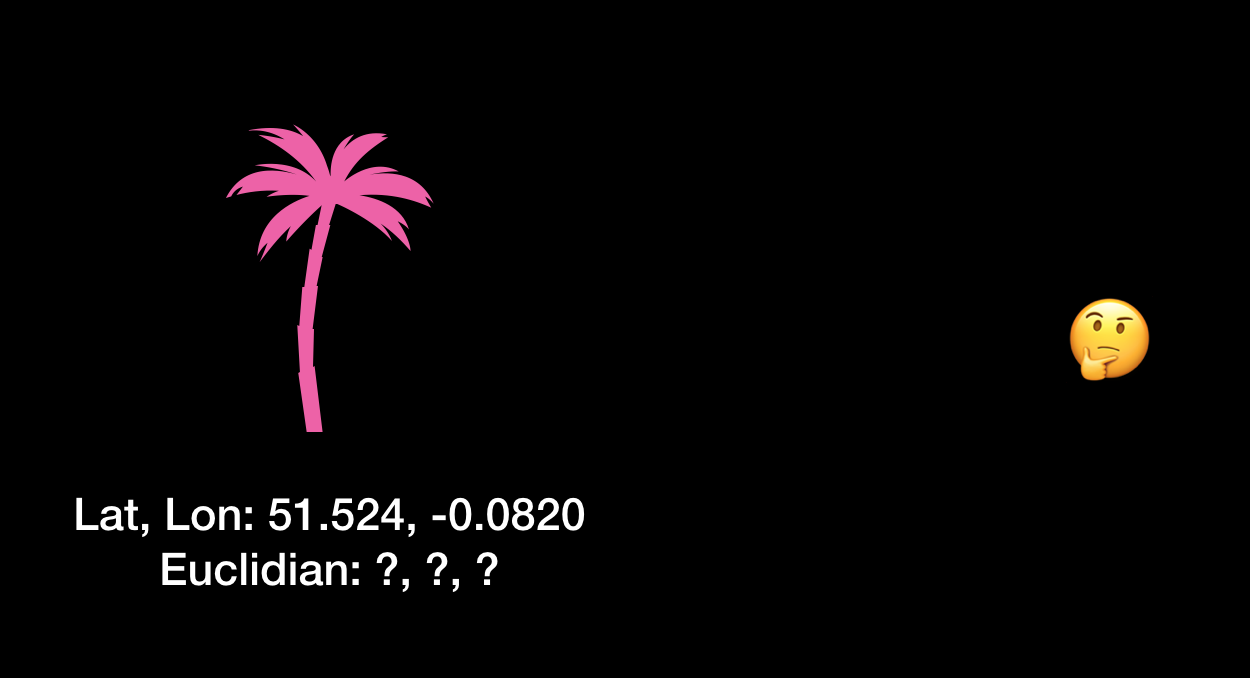

We know where our object (in this case a plant) should be placed in the world, but what is its coordinate in Euclidean space?

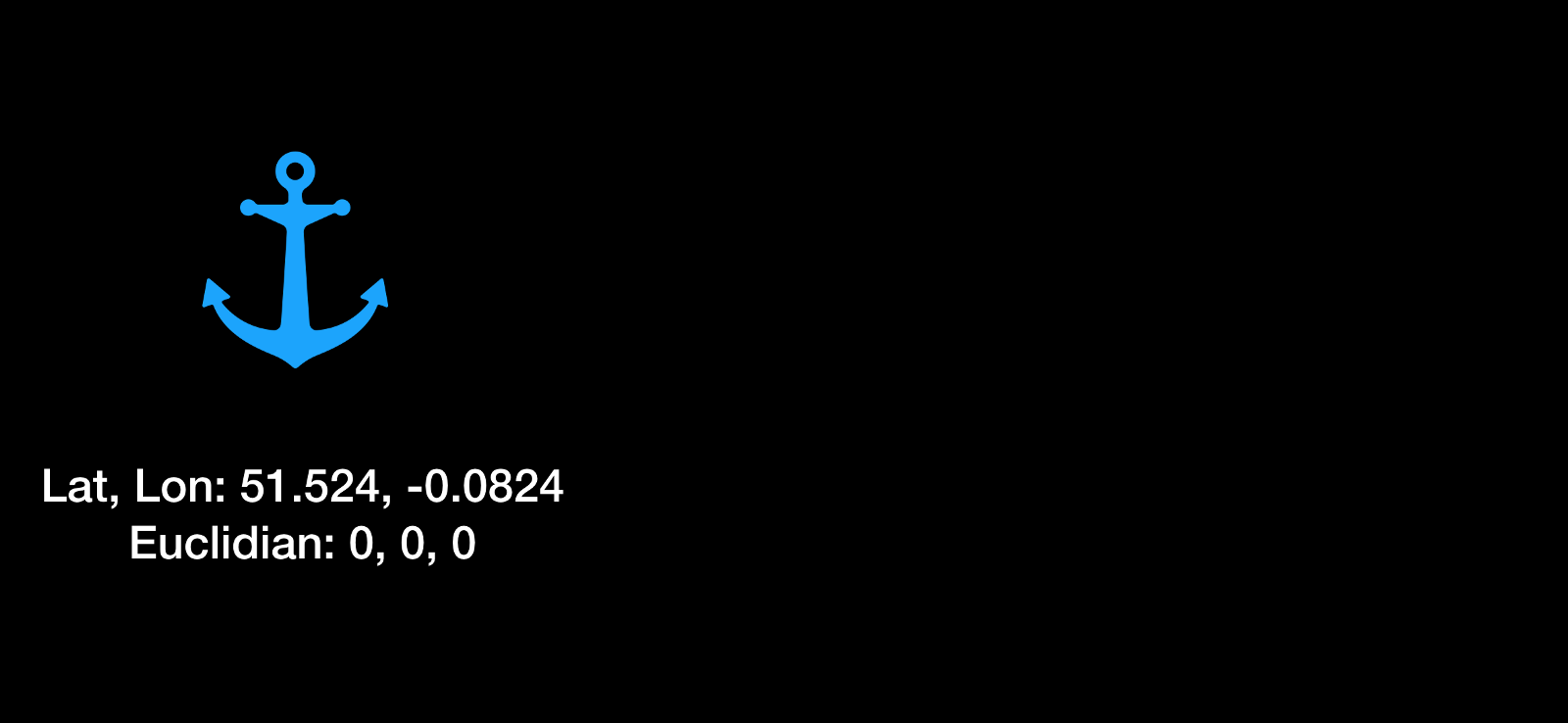

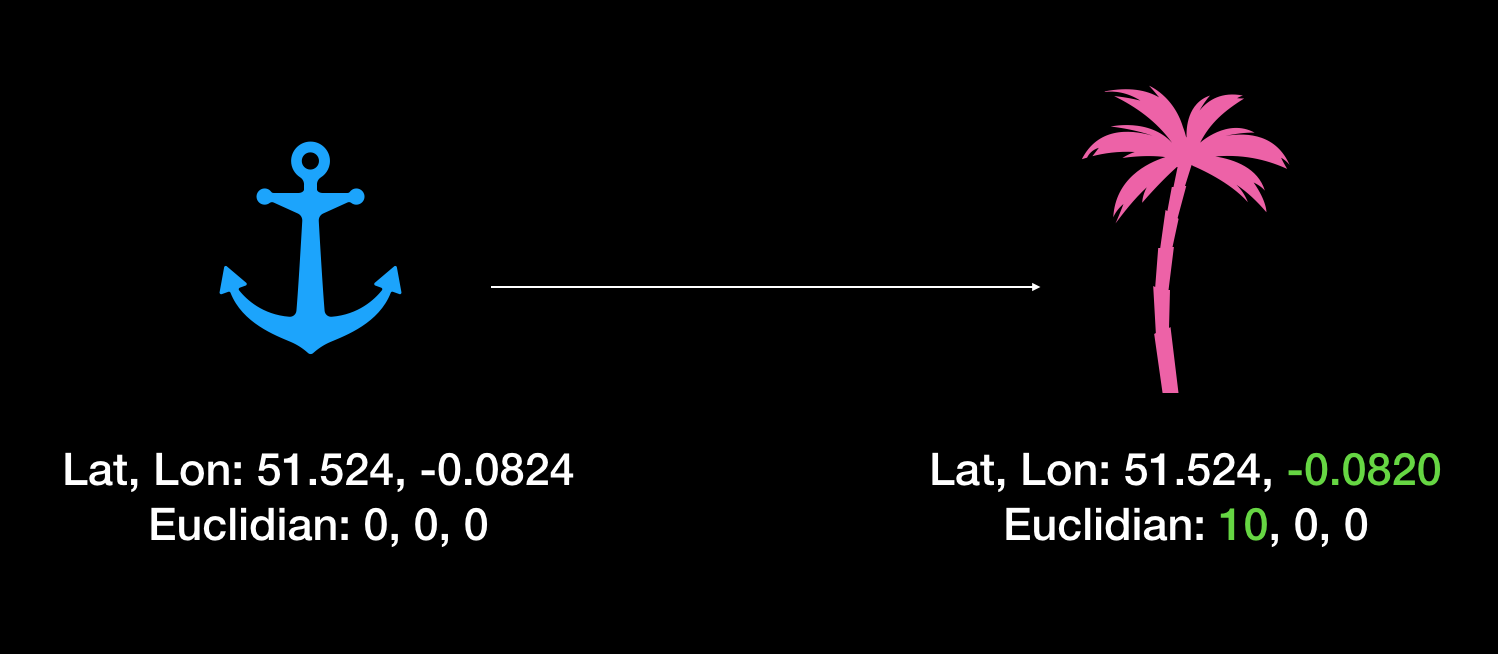

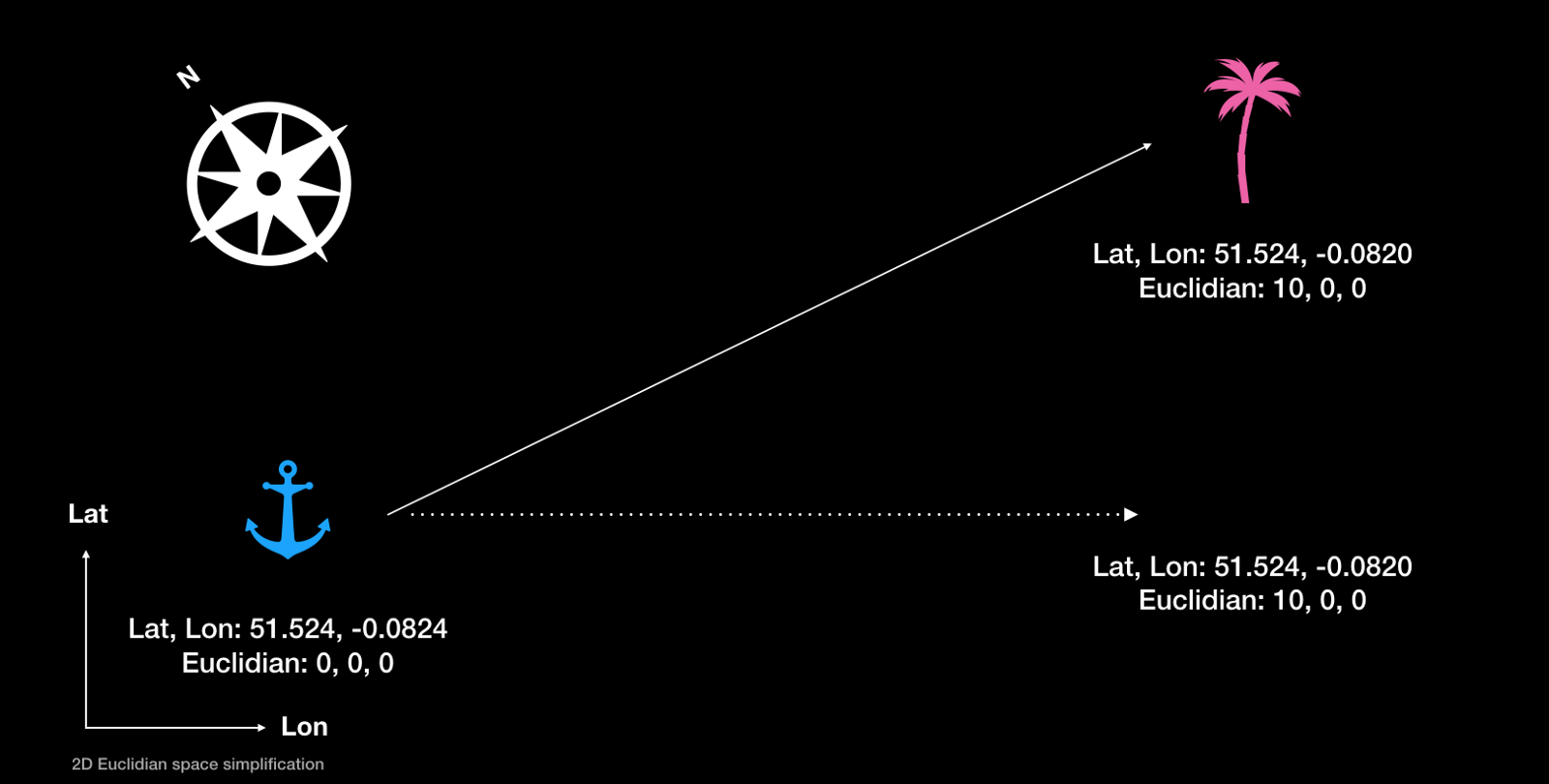

One common way to do this is to use a point in Euclidean space as the "scene anchor" and to relate to it the geocoordinates measured via our geopositioning system, in this case ScapeKit. This means that once we have started up the AR and scene graph system of our choice, we take a geolocation measurement, save it and assign it to a node in the scene. We call this node the "anchor", since all the content will be attached to it.

We assign the measured geocoordinate to a point in Euclidean space, in this case the scene's world-space origin.

This way we can display a 3D model at a particular location by offsetting the anchor's Euclidean position by the model's displacement from the anchor, converted from geocoordinates into metres:

The Euclidean space position of the plant will be some displacement from the anchor node, (to a good approximation over short distances) directly proportional to the displacement of the plant from the anchor in geocoordinates.
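ScapeKit performs this conversion for us, but it helps to see the idea in code. The following Swift sketch is not ScapeKit's implementation: it uses a simple equirectangular (flat-Earth) approximation with a mean Earth radius of 6,371 km, which is reasonable over a few hundred metres, and assumes ARKit's `.gravityAndHeading` world-alignment convention, where +x points east, +y up and -z true north. All type and function names are hypothetical.

```swift
import Foundation

struct GeoCoordinate {
    var latitude: Double   // degrees
    var longitude: Double  // degrees
}

struct ScenePosition: Equatable {
    var x: Double  // metres, +x = east
    var y: Double  // metres, +y = up (height is set separately, e.g. from the ground plane)
    var z: Double  // metres, -z = north
}

let earthRadius = 6_371_000.0 // mean Earth radius in metres

/// Converts `target` into scene coordinates relative to an anchor node placed at
/// `anchorScenePosition` whose measured geolocation is `anchorGeo`.
/// Equirectangular approximation: only valid for short distances from the anchor.
func scenePosition(of target: GeoCoordinate,
                   anchorGeo: GeoCoordinate,
                   anchorScenePosition: ScenePosition) -> ScenePosition {
    let degToRad = Double.pi / 180
    let dLat = (target.latitude - anchorGeo.latitude) * degToRad
    let dLon = (target.longitude - anchorGeo.longitude) * degToRad
    let north = dLat * earthRadius
    // Meridians converge towards the poles, so a degree of longitude shrinks by cos(latitude).
    let east = dLon * earthRadius * cos(anchorGeo.latitude * degToRad)
    return ScenePosition(x: anchorScenePosition.x + east,
                         y: anchorScenePosition.y,
                         z: anchorScenePosition.z - north)
}
```

For example, with the anchor at 51.524015, -0.082064 and at the scene origin, a plant 0.0001 degrees of latitude further north ends up roughly 11.1 m along -z.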

Easy, right? Not really…

The North matters

Obtaining an accurate heading is crucial. Because mobile device compasses are typically only accurate to within plus or minus 30 degrees, the whole 3D scene could be rotated incorrectly around the scene anchor, misplacing content even though our geocoordinate-to-Euclidean calculations are correct. This is why it's imperative that any positioning system provides a highly accurate reading of where 'North' really is.

An inaccurate North reading can result in the content being rotated around the origin of the scene, hence appearing in the wrong place. The dotted line represents where we expected the plant to appear.
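To get a feel for how much a bad North reading hurts, the Swift sketch below rotates a horizontal offset around the anchor by a heading error and compares the result with the closed-form chord length 2 * d * sin(θ / 2), where d is the content's distance from the anchor and θ the heading error. The function names are illustrative, not from any SDK.

```swift
import Foundation

/// Rotates a horizontal offset from the anchor (metres east, metres north)
/// clockwise, as seen from above, by `degrees`: this is what a heading error
/// does to every object attached to the anchor.
func rotate(east: Double, north: Double, byDegrees degrees: Double) -> (east: Double, north: Double) {
    let a = degrees * Double.pi / 180
    return (east: east * cos(a) + north * sin(a),
            north: -east * sin(a) + north * cos(a))
}

/// Closed form of the resulting misplacement: the chord 2 * d * sin(error / 2).
func headingMisplacement(distance: Double, headingErrorDegrees: Double) -> Double {
    2 * distance * sin(headingErrorDegrees * Double.pi / 360)
}

// A plant 10 m due north of the anchor, seen through a 30 degree heading error.
let shifted = rotate(east: 0, north: 10, byDegrees: 30)
print(hypot(shifted.east, shifted.north - 10)) // roughly 5.18 m
```

With the 30 degree compass error mentioned above, a plant 10 m from the anchor would appear about 5.2 m from its intended spot, and the error grows linearly with distance from the anchor.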

Accurate geolocation matters even more!

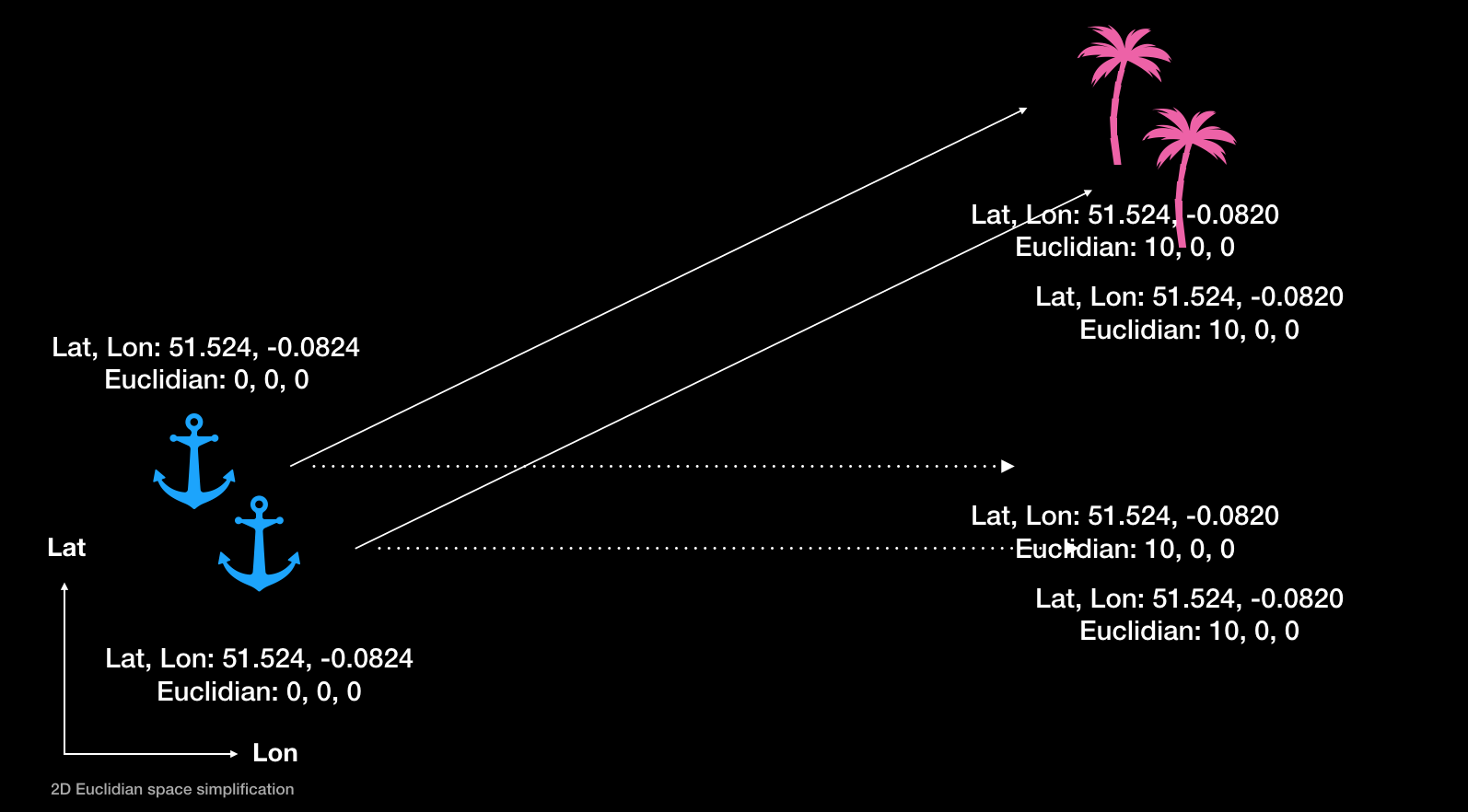

In the same way that accurate heading plays a crucial role in the correct functioning of large-scale AR apps, another even more important factor is accurate geopositioning.

If your positioning system doesn't give you accurate, consistent latitude and longitude readings, your whole 3D scene is displaced by some amount each time the user launches the app. Combine that with a possibly inaccurate heading reading, and suddenly your content is all over the place instead of where it should be!

A combination of poor geolocation and heading readings can lead to the whole scene being badly misplaced. Your users won't be happy…

GPS can only give us readings with a confidence radius of at least a couple of metres (when we're lucky), which is far too imprecise for AR content persistence. Couple that with an inaccurate heading reading from the magnetometer (which goes haywire in urban environments) and you can see why an accurate positioning system is fundamental to developing good large-scale AR apps.

To drive the point home, imagine we're using a GPS + magnetometer combination to get readings for our scene anchor, and that our conversion calculations are sound. Now say we want to display an advertisement for Starbucks in front of one specific store, so we give our 3D content that store's geolocation and all is well so far. The issues arise when users open our app and try to see the content where it should be: due to the imprecision of our positioning system, the content will appear in a different place every time someone uses the app. What if it appeared in front of a competitor's cafe nearby instead? 👀

But worry not, it's our lucky day! 🎉

Enter ScapeKit

We now know the positioning challenges that come with developing large-scale AR apps, so here's the good news: you actually don't have to worry about them that much. ScapeKit is an SDK for large-scale, geo-based AR which handles all the challenges discussed above:

- It gives accurate, consistent geolocation and heading readings

- It handles the conversions from latitude and longitude to Euclidean space

- It integrates with your scene graph system of choice, e.g. SceneKit

That means that all we have to do is pass ScapeKit a geolocation, and it will give us back an object we can use to place content in our 3D scene at that location.

You can grab ScapeKit here

Now for some fun

Armed with the knowledge of what's happening under the hood of ScapeKit, let's move on to actually build the content for our AR app.

Storing the data

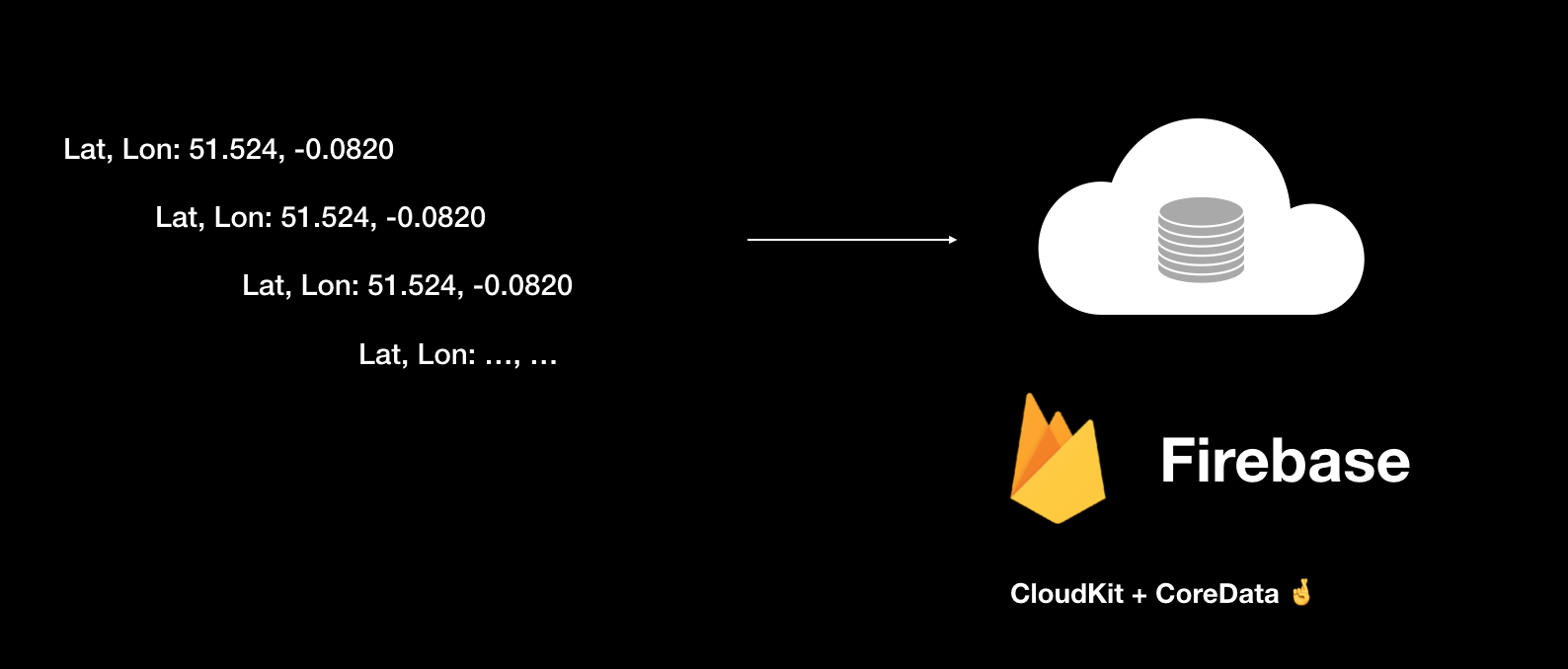

One of the first things we'll want to do is show multiple objects in the 3D scene and make them persistent. This means we will have a number of geolocations, such as these:

51.524, -0.0820

51.524, -0.0820

51.524, -0.0820

51.524, -0.0820

...

To do so we'll need a way to store their geolocation (and other information) and to make such data accessible by multiple users at the same time.

We save the position of our objects to Firebase.

Remember that we also want changes made to the scene to be reflected in real-time to all nearby users!

Out of the various real-time database solutions out there, I find Firebase to be the most accessible and performant, so it's the database system I suggest you use and the one I used in the AR Gardening app. It's well documented, it has SDKs for the most popular programming platforms and languages and, most of all, it fits all of our requirements:

- It's fast

- It's real-time

- Changes made by one user are immediately reflected across all users

When our users make changes in the app, all we have to do is update the relevant database document (to use Firebase's terminology), and all users will receive the update in real time, provided they're connected to the internet.

I'm not going to explain how to set up Firebase here, since it's very well documented on their website.

Another important thing to keep in mind when using Firebase is to make it the source of truth for our data: we don't want to worry about which of our users has the right data at any given time, and making Firebase the authority on the current state of the data solves this problem.

What should we store in the database?

That's very much up to us and what we want our application to do.

However, there are some guidelines that we should follow which apply well to all large-scale AR applications. At the very least we should store:

- The geolocation of the object (i.e. its latitude and longitude)

- The creation timestamp

- The timestamp of when the object was last modified

- The ID of the owner/creator
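As a minimal sketch, those fields could be modelled in Swift as a `Codable` struct. The type and field names are hypothetical, and the JSON round trip below only illustrates the shape of a document; with Firestore you would more likely use its native `GeoPoint` and `Timestamp` types for the location and dates.

```swift
import Foundation

/// Hypothetical shape of one placed object's database document.
struct PlacedObject: Codable, Equatable {
    var id: String
    var latitude: Double      // degrees
    var longitude: Double     // degrees
    var createdAt: Date
    var lastModifiedAt: Date
    var ownerId: String       // ID of the user who created the object
}

/// Encodes dates as seconds since 1970 so they are easy to read in the stored JSON.
func makeEncoder() -> JSONEncoder {
    let encoder = JSONEncoder()
    encoder.dateEncodingStrategy = .secondsSince1970
    return encoder
}

func makeDecoder() -> JSONDecoder {
    let decoder = JSONDecoder()
    decoder.dateDecodingStrategy = .secondsSince1970
    return decoder
}

let plantedAt = Date(timeIntervalSince1970: 1_560_000_000)
let flower = PlacedObject(id: "flower-1", latitude: 51.524015, longitude: -0.082064,
                          createdAt: plantedAt, lastModifiedAt: plantedAt, ownerId: "user-42")
let json = try! makeEncoder().encode(flower)
print(String(data: json, encoding: .utf8)!)
```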

Even if we won't need all of these fields in the first version of our app, chances are we will in version 2 and higher, so it's a good idea to set up the database to handle them from the get-go. This way we won't have to reset the database for our existing users when we update the application to support more or different fields.

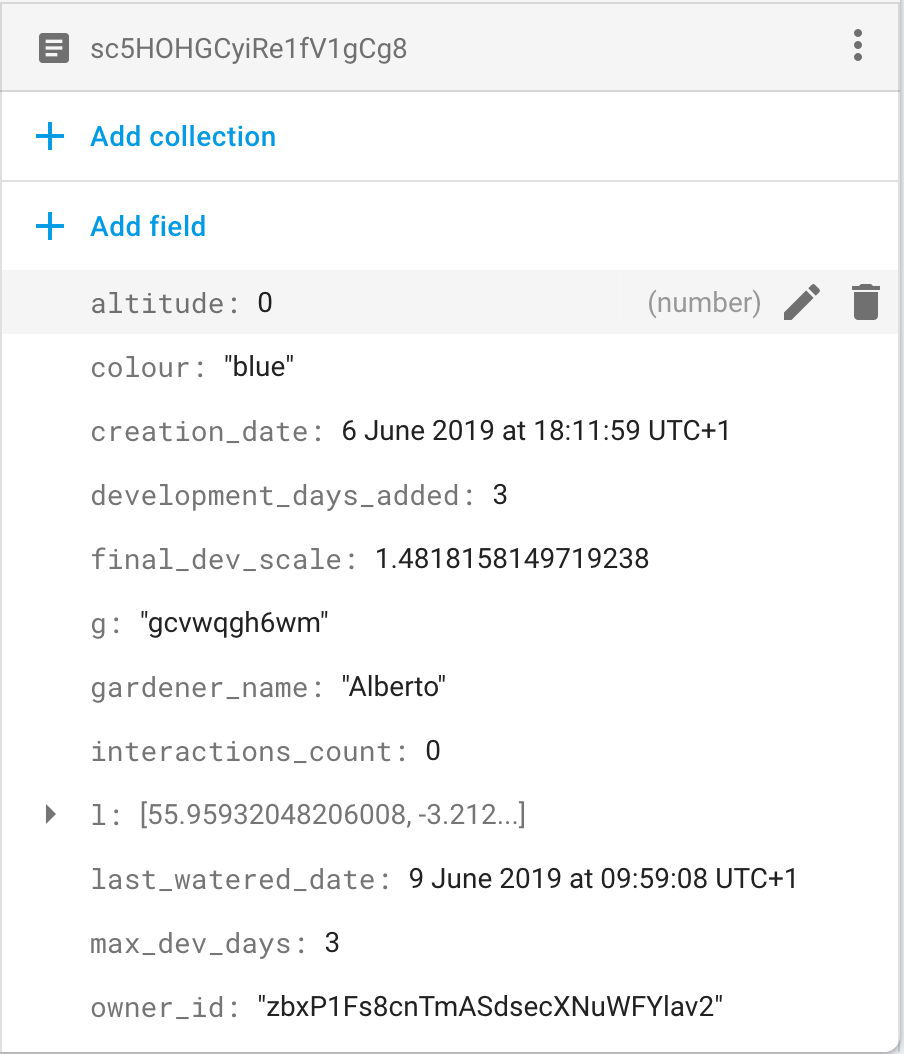

As an example database structure, here's a screenshot captured from the Firebase console of my AR Gardening project:

A screenshot of the data in the database for my AR Gardening app.

It includes the fields I talked about above (although in my case the "last modified timestamp" is represented by the field "last_watered_date") and additional ones I need to support the functionalities of my application.

Important considerations when using Firebase

There are two important considerations we should keep in mind when using Firebase in our projects: structuring our data and eliminating lag.

Planning the structure of the data in advance allows us to avoid situations in which we have to reset the database for all of our users. Obviously, there will be times when we will need to bite the bullet and update/add fields of our database documents, so the second best thing you can do is make sure to structure the data in a way that allows for easy expansion and migration.
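One way to keep that expansion painless on the client, sketched here in Swift, is to decode fields that were added after launch with `decodeIfPresent` and a default value, so documents written by older app versions still load instead of failing. In this sketch `schemaVersion` and `growthStage` are hypothetical examples of such later additions, not fields from the original app.

```swift
import Foundation

/// Hypothetical v2 of a placed-object document. `schemaVersion` and `growthStage`
/// were added after launch, so older documents don't contain them.
struct PlacedObjectV2: Decodable {
    var latitude: Double
    var longitude: Double
    var ownerId: String
    var schemaVersion: Int
    var growthStage: Int

    enum CodingKeys: String, CodingKey {
        case latitude, longitude, ownerId, schemaVersion, growthStage
    }

    init(from decoder: Decoder) throws {
        let container = try decoder.container(keyedBy: CodingKeys.self)
        // Fields present since version 1 are required.
        latitude = try container.decode(Double.self, forKey: .latitude)
        longitude = try container.decode(Double.self, forKey: .longitude)
        ownerId = try container.decode(String.self, forKey: .ownerId)
        // Fields added later fall back to defaults when missing.
        schemaVersion = try container.decodeIfPresent(Int.self, forKey: .schemaVersion) ?? 1
        growthStage = try container.decodeIfPresent(Int.self, forKey: .growthStage) ?? 0
    }
}
```

Storing an explicit `schemaVersion` also gives a future migration script an easy way to find documents that still need updating.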

Lag compensation means that when the user performs an interaction, the visual feedback for it should happen immediately instead of waiting for the data authority (in our case, Firebase) to reply with the new data before showing the visual feedback.

Let's explore an example to better understand this concept. Say we allow our users to tap on the screen to place something. When that happens, we need to do two things: show the newly placed object to the user who performed the tap, and show it to everybody else nearby. A quick solution would be to show a new object only when the data authority (i.e. Firebase) tells the app there's new data to be displayed. This means that the logic to display a new object in the scene would be the same for both the user performing the interaction and the nearby users. However, this is a problem for the user performing the interaction: she expects the visual feedback to happen immediately, but instead she has to wait for the app to send the data to the server and for the server to reply "all good, here's the new data".

The solution is lag compensation: we will perform the interaction immediately for the user performing it, as if there weren't an authoritative server to communicate with. We then send the data to the server for storage and when the server replies with the new data, we check whether it's different from what we expect. If not, we ignore it; if yes, we correct what we had already displayed on screen. For our simple app, most of the time the data authority will reply with the data we expect, hence allowing us to achieve lag compensation very nicely.
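The pattern can be sketched in a few lines of Swift. This is a simplified illustration that assumes the on-screen state is just a growth stage per object ID and that the realtime database delivers every authoritative value to `receiveFromAuthority`; the class and method names are hypothetical, and the actual database write is abstracted as a `send` closure.

```swift
import Foundation

/// Hypothetical on-screen state: object ID -> growth stage currently displayed.
final class LagCompensatedScene {
    private(set) var displayed: [String: Int] = [:]

    /// Local interaction: update what the user sees immediately, then hand the
    /// change to `send` (e.g. a database write) without waiting for a reply.
    func applyLocally(id: String, stage: Int, send: (String, Int) -> Void) {
        displayed[id] = stage
        send(id, stage)
    }

    /// Authoritative value from the data authority (e.g. a realtime listener).
    /// Returns true if the screen had to change, false if it already matched.
    @discardableResult
    func receiveFromAuthority(id: String, stage: Int) -> Bool {
        guard displayed[id] != stage else { return false } // prediction was right: ignore
        displayed[id] = stage                               // authority wins: correct the scene
        return true
    }
}
```

The same `receiveFromAuthority` path also handles objects created by other users, since an unknown ID simply gets displayed. One limitation of this simple version: if a user makes two quick changes, the echo of the first can briefly "correct" the screen before the second arrives; tracking pending writes per object is one way around that.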

If you want to learn more about it, this is a good starting point. Google is also your friend for this.

Bottom line: make sure you implement some form of lag compensation!

Filtering objects

At this point, we have a way to store the data for the objects in our scene, most importantly their geocoordinates. By combining that data with ScapeKit and our scene graph engine of choice, we can display the objects at their correct location in our AR scenes, and all is well.

However what happens when someone places an object in, say, New York? Should our users in London be able to see such an object? Even better, should their app even be notified that such an object exists? Most of the time the answer is no.

What we want, then, is to filter the objects visible to a given user based on her geocoordinates. This also keeps every instance of our app from downloading the whole dataset, which could become very large very quickly if our app goes viral!

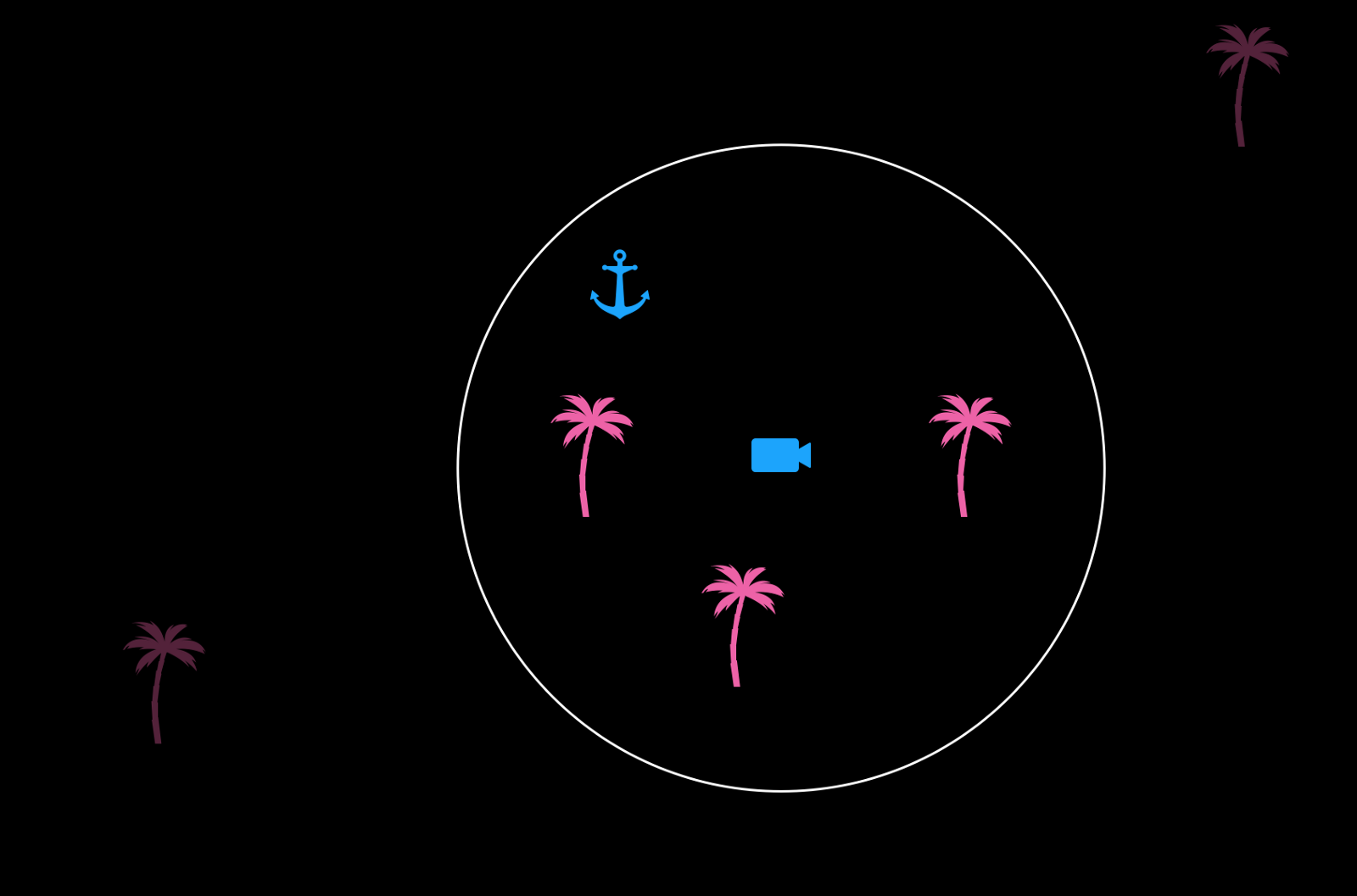

This diagram shows a top-down view of the 3D scene. The camera symbol represents the position of the user, with the filtering area around it.

Another important reason to perform location-based filtering is that since we don't (yet) have occlusion from every building, displaying objects which are too far from the user would break the illusion of AR since such objects would appear in front of buildings whereas they should be behind them, invisible.
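The filtering criterion itself is simple: keep what lies within some radius of the user. The Swift sketch below expresses it with the haversine great-circle distance; the names and the radius are illustrative. Note that filtering on the client like this does not save any downloads by itself: for that, the query has to run on the database side, which is where a geo-query library comes in.

```swift
import Foundation

struct Coordinate {
    var latitude: Double   // degrees
    var longitude: Double  // degrees
}

/// Great-circle distance in metres between two coordinates (haversine formula).
func haversineDistance(_ a: Coordinate, _ b: Coordinate) -> Double {
    let r = 6_371_000.0 // mean Earth radius in metres
    let toRad = Double.pi / 180
    let dLat = (b.latitude - a.latitude) * toRad
    let dLon = (b.longitude - a.longitude) * toRad
    let h = sin(dLat / 2) * sin(dLat / 2)
        + cos(a.latitude * toRad) * cos(b.latitude * toRad) * sin(dLon / 2) * sin(dLon / 2)
    // min(1, ...) guards against rounding pushing the argument of asin above 1.
    return 2 * r * asin(min(1, sqrt(h)))
}

/// Keeps only the objects within `radius` metres of the user.
func nearbyObjects<T>(_ objects: [T], location: (T) -> Coordinate,
                      user: Coordinate, radius: Double) -> [T] {
    objects.filter { haversineDistance(location($0), user) <= radius }
}
```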

If you're on iOS, I recommend you use GeoFirestore-iOS to perform location-based filtering. It integrates well with Firebase's Firestore database and it's easy to use. You can find out more about it here.
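As far as I know, geo-query libraries like GeoFirestore are built on geohashes: a location is encoded as a short string such that nearby points tend to share a common prefix, which lets the database answer "what's near here?" with range queries on that string. For intuition, here is a standard geohash encoder in Swift; it is not GeoFirestore's code, and you wouldn't need to write it yourself when using the library.

```swift
import Foundation

/// Standard geohash encoder: alternately bisects the longitude and latitude
/// ranges (starting with longitude), records which half the point falls in,
/// and packs every 5 bits into one base32 character.
func geohash(latitude: Double, longitude: Double, precision: Int = 9) -> String {
    let base32 = Array("0123456789bcdefghjkmnpqrstuvwxyz")
    var latRange = (lo: -90.0, hi: 90.0)
    var lonRange = (lo: -180.0, hi: 180.0)
    var hash = ""
    var bitCount = 0
    var value = 0
    var isLongitudeBit = true

    while hash.count < precision {
        if isLongitudeBit {
            let mid = (lonRange.lo + lonRange.hi) / 2
            if longitude >= mid {
                value = value * 2 + 1
                lonRange.lo = mid
            } else {
                value = value * 2
                lonRange.hi = mid
            }
        } else {
            let mid = (latRange.lo + latRange.hi) / 2
            if latitude >= mid {
                value = value * 2 + 1
                latRange.lo = mid
            } else {
                value = value * 2
                latRange.hi = mid
            }
        }
        isLongitudeBit.toggle()
        bitCount += 1
        if bitCount == 5 {
            hash.append(base32[value])
            bitCount = 0
            value = 0
        }
    }
    return hash
}
```

Each extra character narrows the cell, and shorter hashes are prefixes of longer ones for the same point. Two points close together can still fall on either side of a cell boundary, which is why geo-queries typically also look at neighbouring cells.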

Setting the ground level

Our scene content should be placed at street level, i.e. on the ground. Therefore we need to use the plane-detection feature of our AR system of choice to "read" the ground level and use that measurement to place content on top of it correctly. This is because ScapeKit currently doesn't provide an altitude reading accurate enough to set the height of objects from that measurement alone.

Failing to set the ground level would result in our content "floating" above the ground in an unnatural way.

An important lesson I learnt, which I cannot emphasize enough, is that you should make setting this up as easy and as intuitive as you can for the user. AR is, after all, still a relatively unknown technology to the general public and many people will not be familiar with the concept of "scanning the ground", let alone AR itself. I initially had many instances of users reporting that the content was floating, because I hadn't made this as easy as it should have been.

For example, a good animation goes much further than a line of text in explaining what to do, but what I found most effective in leading the user to scan the ground correctly was the FocusNode repository by Max Fraser Cobb. The focus node makes it very easy for the user to understand what's happening and when the ground has been scanned sufficiently. For reference, here's a video of this onboarding flow in action in my AR Gardening app:

Make sure you engage the user when letting them set the ground level.

If there's one thing you should take away from this section, it's that you should spend a lot of time making this part of the onboarding as smooth and frictionless as possible. I speak from experience here, so please do! If you don't, users will find it boring or think your application is broken, and never open it again.

Give visual feedback

This ties in with what we've just talked about in terms of making the onboarding as accessible as we can.

Due to the nature of any localisation system and of the way any AR system first starts up, there will be times when the user is left to wait for either the localisation to happen, for network data to be delivered, or for the AR system to start up (even all three of them at the same time if you're unlucky).

Because of this, we must make sure we display meaningful, well-thought-out animations to communicate to the user that work is being done in the background and that the app cannot proceed to the next stage until that background task is complete. For example, most vision-based localisation systems have some delay between sending data off and receiving a measurement back.

Showing a loading animation can be useful to hide any network lag.

In the video above I am using a very simple animation, but it seems to be effective according to user feedback (you can find this and more 2D UI animations for iOS in the repository NVActivityIndicatorView).
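One way to keep this logic manageable is to track each background task explicitly and derive both the loading message and the "can we proceed?" decision from that state. A minimal Swift sketch follows; the task list and messages are hypothetical examples based on the three waits described above.

```swift
import Foundation

/// Hypothetical background tasks the user may be waiting on, in display order.
enum StartupTask: CaseIterable {
    case arSession, localisation, networkData

    var message: String {
        switch self {
        case .arSession: return "Starting AR…"
        case .localisation: return "Finding your position…"
        case .networkData: return "Loading nearby content…"
        }
    }
}

struct StartupProgress {
    private(set) var completed: Set<StartupTask> = []

    mutating func markDone(_ task: StartupTask) { completed.insert(task) }

    /// The first unfinished task, used to choose which loading message to show.
    var currentTask: StartupTask? {
        StartupTask.allCases.first { !completed.contains($0) }
    }

    /// The experience can proceed only once every background task has finished.
    var canProceed: Bool { currentTask == nil }
}
```

Because tasks can finish in any order, deriving the message from the set of completed tasks avoids the loading animation getting stuck on a stage that is already done.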

It's very important that we listen to user feedback when implementing these onboarding features: it's easy to make assumptions about what works best but your users will definitely not like some of your solutions, so we should keep an open mind and follow their suggestions!

Allow users to restart the experience in-app

Sometimes the measurements are not as accurate as expected, and it can lead to the issues we discussed in the first part of the article. This can often be due to poor internet connection or other external factors.

Other times ARKit will take a while to stabilise and, until it does, will make your content "jump around" by moving the 3D scene's world origin.

For both of these reasons, you should allow your users to restart the experience with ease. Two buttons at the top of the screen, one to restart the positioning system and the other to restart ARKit and take a new ground measurement, worked well for me. I experimented with menus and more advanced UI, but users preferred the simplicity of two always-visible buttons. This also makes it extremely easy for them to learn what to do when the experience is not stable and needs to be restarted.

Here's what the UI in my AR Gardening app looks like at the top of the screen:

Left button: restart ARKit and take a new ground measurement. Right: Restart the positioning system.

I recommend you have these buttons and that you plan how to teach your users about their functionality and importance. There will be times when they are needed, sometimes more than you think, due to ARKit not working as well as you'd expect when outdoors. So it's important your users know how to use them, or they will just quit your app!

📺 Finally the demos! 📺

I've teased you long enough, so here are two videos which show the complete flow of the AR Gardening app with localisation, placement of content, and finally relocalisation and the ability to find the content in the same spot again:

Localisation + Placement of content.

Finding the content again after relaunching the app.

To prove that the app is interactive and multi-user, I also filmed the same scene with two different phones. Note that the phones are simply connected to the internet, not to each other directly, so the same effect would be replicated even if they were not close to each other:

Content updated on one device is immediately updated on all nearby devices.

Now, let's wrap this up!

The 1–2–3 of large-scale AR apps

Before we sum up, here are the steps I recommend you take when developing a large-scale AR application:

- Quickly integrate database + geolocation system (e.g. ScapeKit), use basic geometry to create the first implementation

- Spend a lot of time getting the onboarding flow for your users right

- Polish the visuals, the interactions, the UI, etc

By far, step #2 is the most important one: if you don't spend enough time on it, your users will not use your app a second time and will think that it's broken.

I believe that at this stage one of the biggest barriers we have in the AR consumer market is educating users on how to use AR applications and making sure their experiences go as smoothly as possible.

In fact, Apple recently made a pre-built UI available to developers for onboarding users in AR apps (ARCoachingOverlayView, introduced with iOS 13). They must have recognised the widespread struggle amongst developers to create consistent AR onboarding experiences, and decided to come to our rescue.

Conclusions

I hope that armed with this information you'll be able to get started with large-scale AR apps quickly and that you can avoid going through some of the (sometimes very long) process of trial and error I went through.

In summary, you should:

- Account for hiccups in ARKit

- Make it dead easy to restart the experience in-app

- Store assets on the device, not the cloud

- Store data in the cloud

- Make the user onboarding your priority

Bonus 🎁

If you've made it this far through the article, congrats! Here's a present for you.

When you think about it, our Firebase database doesn't really care where the data we write in it comes from. Until now we've talked about integrating Firebase with a mobile app, but what if the data came from somewhere else?

Say, a web app with a map view of where we want to place content remotely?

We could use a map view to place content remotely. Thanks to how we structured the mobile app, its users would receive the updates in real time, just as if someone were placing the objects manually via the app.

I, together with Danilo Pasquariello, experimented with this at the Scape Hackathon 2019, where we went as far as using WebVR in combination with a 3D map of London for even more precise positioning.

However, to keep things simple one could use a 2D map provider such as Mapbox or Google Maps and embed a map view in an interactive web app. The user would then be able to tap anywhere on the map, and our web app would save the geo-coordinates of the tap to the Firebase database just like our AR app would. The users of our AR app near the location of the tap could even see the object appearing and being changed in real time! This could enable a first, very cool version of large-scale remote content placement: you could place objects in London from New York 🧙♂️.

I think this idea has lots of potential and that it should be explored further by more developers!

Credits

Many thanks to the amazing Natalia Sionek for having animated the sunflower 3D model in record time! Make sure to check her out.

The 3D models used in the demo app are:

- https://poly.google.com/view/a9qW8wSfaaX

- https://poly.google.com/view/6ktZgxSVVn1

- https://poly.google.com/view/3yzoWzrCgoS

- https://poly.google.com/view/87L4pG0oFMi

- https://poly.google.com/view/dNUS4xC0D6C

Many thanks to the creators of these assets!